Predictive Processing

Why Expectation Matters for Movement and Pain

Predictive processing is a fascinating model of perception that I have been studying lately. In some ways it is very commonsense and intuitive, and in others it is very challenging and mind-expanding. In this post I’ll describe the parts I’ve found most interesting and practical.

Before getting into that, let’s review why any of this should be interesting to anyone concerned with movement and pain.

First, good movement requires good perception. The skill of moving your body with coordination is inseparable from the skill of perceiving where your body is in space and how it is moving. We perceive to move and move to perceive, and that is why we often say that great movers have amazing "body sense" or "proprioception."

Second, pain is a kind of perception. It depends on the brain’s interpretation of whether the body is in danger and what needs to be done to protect it. If your foot hurts, that means your brain perceives, rightly or wrongly, that it is damaged. Perceptions about the body (like anything else) can be mistaken, which is why we can have pain in areas that aren't damaged, and damage in areas that aren't painful. By learning more about the science of perception, we necessarily learn more about pain and how to treat it.

The Conventional Model For Perception: Bottom-Up

The conventional model of perception works roughly as follows. We collect sensory information through nerve endings in the eyes, ears, skin, muscles, etc. This information is relayed to the brain, which processes the information, interprets its meaning, and then creates a perception about the cause.

For example, when I see my wife’s face in front of me, this is because light bounced off her face, the pattern of the light was registered by my eyes and sent to my brain, which recognized the pattern as coming from my wife’s face, so it created the perception of her being there.

Here’s another example involving pain: if someone feels pain in their knee when they take a step, this is because the mechanical force of the step triggered nociception (nerve signals about potential damage), the signals reached the brain, the brain concluded the knee was under threat, and it created pain to encourage protection (maybe by limping).

This model is therefore very “bottom-up” or “outside-in.” It emphasizes the flow of information from the outside world to the periphery of the body, and then from the periphery to the brain.

What’s missing from this story? It doesn’t include any explanation of “top-down” factors that affect perception, such as past experience and expectation. This is where the predictive coding model adds value.

Predictive Coding: Expectation Matters

According to the predictive coding theory, the brain is always building and refining its representations or models of the outside world (and our bodies). Our perceptions depend in large part on these models, not just incoming sensory data.

For example, I have an internal model of my house that includes only one four-legged creature - my dog Levi. So if I walked through the living room in low lighting and glanced at a wolf, I would probably literally see my dog Levi. In other words, my perception would be determined more by my expectations than by actual sensory data from my eyes.

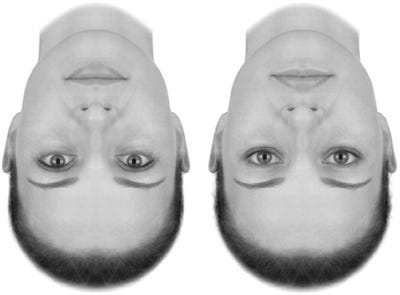

Check out the pictures below for some other examples of how expectation can affect perception.

In the first two images, you perceived something very different from what your eyes told you, based on your prior assumptions about how words are usually ordered or spelled. In the third picture, you saw two normal looking faces, based on your prior experiences with face parts being arranged in certain ways. (Turn the picture upside down to see a very different arrangement.)

This happens with many other kinds of sensations. If you think satanic messages are hidden in rock lyrics, you can hear them if you play Stairway to Heaven backwards. If you come up from behind someone and say "hot!" at the same time you put ice on their arm, they may briefly feel heat. The painkilling effect of a placebo is based largely on the expectation that it will reduce pain. And nocebos work the opposite way - expecting pain can cause pain. To some extent, we perceive what we predict.

Comparing Top-Down to Bottom-Up

The predictive coding model has a great explanation for exactly how expectation affects perception. The nervous system is arranged hierarchically, with the cortex at the top and nerve endings at the bottom. Higher levels of the nervous system are constantly “predicting” the incoming flow of sensory data from lower levels. These predictions create neural activity that flows downward (top-down) to meet incoming sensory data (bottom-up). When the two meet, what has been predicted is compared with what has been sensed, and the difference between them is a prediction error.

If the error is relatively small, it is disregarded as being random noise or "close enough." Higher levels of the nervous system are not informed of their prediction errors, and the world is perceived exactly as expected. If the error is large, higher levels are notified of their mistake so they can update their model of the world. This creates a subjective feeling that something surprising or important has happened, and attention is automatically shifted to the incoming sensory data so that perception and action can be adjusted accordingly.

The strength or confidence of the prediction has a big effect on how prediction errors are treated. If the prediction about incoming sensory data is highly confident (perhaps based on tons of past experience), even significant errors will get ignored. But if the prediction is not confident (perhaps because the context is novel and errors are anticipated), then bottom-up sensory information has a better chance of ascending to higher levels of the nervous system and causing changes in perception. Attention also matters for how prediction errors get processed. If I pay attention to a certain stream of sensory information, it increases the chance that small prediction errors will be noticed and not dismissed. The system can therefore bias perception in favor of top-down or bottom-up factors based on the relative levels of confidence or attention given to each.

For example, according to my model of the world, the only black SUV in my garage is my car. If you switched it for another one, I would probably get in without even noticing. My perception would be controlled by expectation, not the raw data from my eyes. But I wouldn't suffer the same illusion in a crowded parking lot where I have the expectation that other black SUVs will be present, and therefore my perceptions would be controlled far more by bottom-up sensation than top-down prediction.
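Here's a toy sketch in Python of how confidence and attention could decide the fate of a prediction error in the garage and parking lot example. It's my own simplification, not code taken from the research literature: one number stands for the prediction and one for the sensed value, each side gets a made-up "confidence" (precision) weight, attention multiplies the sensory weight, and a hypothetical threshold plays the role of "close enough." The names `perceive` and `Percept` and all the numbers are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Percept:
    value: float     # what ends up being perceived
    surprised: bool  # True if the error was passed upward and the model was updated


def perceive(prediction: float, prediction_confidence: float,
             sensed: float, sensory_confidence: float,
             attention: float = 1.0, threshold: float = 1.0) -> Percept:
    """Toy precision-weighted comparison of a top-down prediction with
    bottom-up sensory data. All parameters are hypothetical illustrations."""
    error = sensed - prediction
    # Attention boosts the weight given to the incoming sensory stream.
    sensory_weight = sensory_confidence * attention
    # Fraction of the error allowed to influence perception (between 0 and 1).
    gain = sensory_weight / (sensory_weight + prediction_confidence)
    weighted_error = gain * error
    if abs(weighted_error) < threshold:
        # "Close enough": the error is treated as noise and the prediction wins.
        return Percept(value=prediction, surprised=False)
    # Large weighted error: perception is nudged toward the sensory data.
    return Percept(value=prediction + weighted_error, surprised=True)


# The SUV example coded as numbers: 0.0 = "my car", 3.0 = "a different car".
garage = perceive(0.0, prediction_confidence=9.0, sensed=3.0, sensory_confidence=1.0)
parking_lot = perceive(0.0, prediction_confidence=1.0, sensed=3.0, sensory_confidence=1.0)
paying_attention = perceive(0.0, prediction_confidence=9.0, sensed=3.0,
                            sensory_confidence=1.0, attention=9.0)
print(garage, parking_lot, paying_attention)
```

With these made-up numbers, the confident garage prediction swallows the error, the parking lot lets it through, and paying close attention in the garage lets it through too. The all-or-nothing threshold is a simplification I've added to mirror the "close enough" idea above.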

Now that we have a basic understanding of how this model works, let's look at how it explains some common and not so common phenomena related to perception.

Pain

The predictive coding framework helps explain why pain is affected by past experiences, thoughts, expectations and emotions, and not just tissue damage.

For example, if you have a long history of feeling pain with low back flexion, you will start to build an internal model that predicts pain with flexion. This will strongly bias you to feel pain each time you bend, even if the back isn't actually producing that much nociception.

You can reduce the contribution of top-down factors to your pain by updating the model of your back. To do this, you need to cause a prediction error by violating your expectation that bending will hurt. A good strategy would be to perform low back flexion in some novel way, perhaps in quadruped or supine, while paying attention to how it feels while bending, so that any prediction errors are not disregarded. That sounds like a large share of movement therapy in a nutshell.
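Here's a toy sketch of that strategy, assuming a simple precision-weighted update: each bend nudges a made-up "expected pain" score toward the pain actually felt, and the size of the nudge depends on attention relative to how entrenched the old model is. Treating a novel position as lowering `model_confidence` is my assumption, the function names are hypothetical, and the 0-10 scores are invented for illustration, not clinical data.

```python
def update_expected_pain(expected: float, felt: float,
                         model_confidence: float, attention: float) -> float:
    """One precision-weighted update of a hypothetical 0-10 'expected pain' score."""
    # The more attention relative to how entrenched the model is,
    # the more of the prediction error gets absorbed into the model.
    gain = attention / (attention + model_confidence)
    return expected + gain * (felt - expected)


def run_sessions(expected: float, felt: float, model_confidence: float,
                 attention: float, sessions: int) -> list[float]:
    """Repeat the same movement several times, recording expected pain after each."""
    history = [expected]
    for _ in range(sessions):
        expected = update_expected_pain(expected, felt, model_confidence, attention)
        history.append(expected)
    return history


# Bending is expected to hurt at 8/10 but actually hurts at about 3/10.
habitual = run_sessions(8.0, 3.0, model_confidence=9.0, attention=1.0, sessions=3)
novel_attentive = run_sessions(8.0, 3.0, model_confidence=1.0, attention=1.0, sessions=3)
print(habitual)
print(novel_attentive)
```

With these invented numbers, the familiar, entrenched version moves the expectation only a little after three bends, while the novel, attentive version brings it most of the way down toward what was actually felt.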

A more aggressive and risky strategy would be to perform some movement where the back muscles have to work very hard to prevent flexion, say a heavy deadlift. Perhaps you do the deadlifts with good form to prevent flexion. It hurts a little, but nowhere near as much as you expected. In fact, you have a visceral feeling of surprise at how strong you feel. This is evidence that you have violated an expectation that your back was too weak and fragile to handle any significant force, and that your map for the back is being updated to account for the prediction error. Good sign!

The bottom line is this - a great deal of what can help with pain in the short term is violating an expectation that something will hurt. There are probably many ways to do that – massage, deadlifts, cat cows, stretching, isometric resistance exercise, active or assisted joint mobility exercises. What they all have in common (if they help with pain) is that they don't hurt as much as you would expect.

Moving Better - Prediction and Action

According to the predictive coding model, there is a profound connection between perception and movement, because each can help correct a prediction error, and minimizing error is really all the system cares about. When the system is confronted with a prediction error, it can do one of two things - update models to reflect the new information (change perception), or alter action in a way that gathers sensory information consistent with the prediction (change movement).

For example, let’s say I am squatting to a box with a barbell on my back. When I squat to a certain depth I expect sensory feedback from my butt indicating touchdown. But there is a prediction error – my butt is silent. I can do one of two things – I can change my perception about the location of the box (oops I forgot to put it in place!) or I could change my action - move my butt a bit lower or further back until I get the predicted feedback.

So one way or the other, the essential goal is always to reduce prediction error, and it doesn't really matter whether that is done by changing perception or action. The important thing is that I don’t crash to the ground with a barbell on my back. Either way, good internal models and good predictions are the basis for generating functional perceptions and actions.
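Here's a toy sketch of the box squat example under a made-up rule: after reaching the depth where touchdown was predicted, first try to resolve the error through action by sinking a little lower, up to a safety limit, and if contact still doesn't come, resolve it through perception by concluding the box isn't there. The function name `squat_to_box`, the centimeter heights, the 10 cm limit, and the one-centimeter steps are all hypothetical.

```python
from typing import Optional, Tuple


def squat_to_box(expected_box_cm: int, actual_box_cm: Optional[int],
                 max_extra_depth_cm: int = 10) -> Tuple[str, int]:
    """Toy resolution of a missing 'touchdown' signal in a box squat.

    Starts at the depth where touchdown was predicted. Returns which route
    resolved the prediction error and the hip height (cm) where it happened.
    actual_box_cm=None means the box was never put in place.
    """
    lowest_allowed = expected_box_cm - max_extra_depth_cm
    hip_cm = expected_box_cm
    while True:
        if actual_box_cm is not None and hip_cm <= actual_box_cm:
            # Action route: moving a bit lower produced the predicted feedback.
            return ("action", hip_cm)
        if hip_cm - 1 < lowest_allowed:
            # Perception route: update the model ("the box isn't there") and stand up.
            return ("perception", hip_cm)
        hip_cm -= 1  # sink one more centimeter and check again


print(squat_to_box(45, 42))    # box a little lower than expected
print(squat_to_box(45, None))  # forgot to put the box in place
```

The sketch ignores a box that is higher than expected, which would have produced an earlier and different kind of surprise.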

Getting better at movement is therefore very much about improving your internal models for movement and your predictions for what kind of sensory feedback you will get during the movement. This means you need a lot of experience, you need to make mistakes, and you need to pay attention to the right streams of sensory information to identify and correct those mistakes through better perceptions and actions. Of course we know most of these things anyway, but I think it's cool to see that applying the predictive coding framework gets us to the right answers.

Here are some cool things we might learn from predictive coding that we don't already know and that aren't easily explained by other models.

Schizophrenia, Autism and Babies

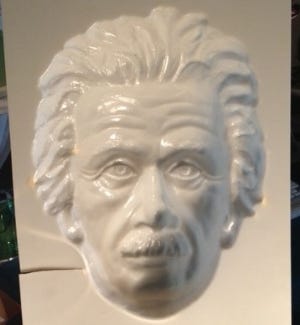

Check out this picture of Albert Einstein - is his nose closer to you or further away?

We expect noses to be closer to us, so most people will see this mask as being convex, when it is in fact concave. Interestingly, schizophrenics (and people stoned on marijuana) are actually less likely to make this mistake. This might be because their perceptions are controlled more by bottom-up sensation than top-down models of the world. And maybe this is why they both tend to experience paranoia.

Schizophrenia involves delusions where everyday events are regarded as incredibly salient. Imagine sitting in a crowded coffee shop and hearing your name in a nearby conversation. This might get your attention, but it probably would not register in your consciousness as being profoundly surprising. But if you had a problem whereby the relevance of unpredicted incoming sensory information was massively magnified, then the mention of your name might feel profoundly important, and perhaps contribute to delusions of reference or paranoia. So perhaps paranoid delusions involve assigning too much importance to minor errors in prediction.

Autism can also be understood as a condition where bottom-up sensation dominates top-down predictions. Even the smallest prediction errors are considered important. Thus, all incoming sensory information is regarded as "newsworthy" and people with autism are "slaves to sensation", constantly distracted or irritated by minor inputs like labels on their clothes, or random noises.

Interestingly, people with autism often self-soothe by engaging in repetitive rhythmic movements. These create a stream of sensory information that is highly predictable. Better prediction allows the suppression of sensory information that would otherwise be overwhelming.

Maybe this is why babies like rhythmic movements, or to be carried around all the time, or to be swaddled. Because they don't have much experience in the world, they have no strong internal models to create confident predictions about their incoming sense data, and they just get blown away by all of the information they are getting about the unpredictable movements of their arms and legs, the variations in the way their back is touching the car seat, and the random noises created by the TV, traffic, etc. Adults are exposed to all of this information too, but we can easily predict it and therefore ignore it. But for babies without good internal models of the world, everything is a blooming, buzzing confusion. Perhaps they are soothed by getting a nice stream of predictable rhythmic sensory information.

Aren't we all?

Lots of interesting food for thought here. Here are some further resources if you want to learn more.

Good articles on predictive coding

An Aberrant Precision Account of Autism

Prediction error minimization: Implications for Embodied Cognition and the Extended Mind Hypothesis

Active Interoceptive Inference and the Emotional Brain

The Hard Problem of Consciousness is a Distraction From the Real One

(Thanks to Derek Griffin and Mick Thacker for linking many of these.)

Articles from this Blog on Related Subjects

Andy Clark on Embodied Cognition and Extended Mind